We are clearly moving toward autonomous systems, and that includes vehicles. Self-driving cars aren't as common as some of us expected a few years ago, but they're getting there. The problem is that they complicate some basic questions, including who's responsible when an autonomous vehicle crashes.

It used to be simple: someone ran a red light or didn't check a blind spot, and that person caused the accident. Now that clarity is fading. Self-driving cars aren't a distant-future idea; they're already traveling quietly alongside us on the roads.

They raise big questions. If the car drives itself and crashes, whose fault is it? The person's? The company's? The software's? The answers still aren't clear and often take real investigation to sort out.

The Old Way of Determining Fault

Traditionally, when a crash happened, investigators looked for human error. Was the driver distracted? Did they speed, fail to yield, or ignore road signs? Fault was almost always about behavior behind the wheel. The legal framework, built on concepts like negligence, liability, and duty of care, assumed a person was in charge.

With autonomous vehicles, the driver might not even touch the wheel. The car is making decisions based on sensors and code. So if something goes wrong, the old framework suddenly looks shaky.

A fully autonomous vehicle might cruise down the highway, change lanes, and slow for traffic, all without any input from the person inside. If it crashes, the focus turns to the system. Did a sensor glitch? Did the algorithm misread a pedestrian crossing the road? Was there a known software bug that hadn't been patched yet?

These are no longer questions about poor driving. They're about engineering, design, and reliability. The legal term for this is product liability: the company that designed, built, or programmed the system could be held responsible if a defect in the technology caused the crash.

That’s a big shift. It drags engineers, software developers, and corporate legal teams into the accident report. Instead of breathalyzer results or brake marks on the pavement, the evidence may come from log files and algorithm audits.

Semi-Autonomous Cars

Most cars on the road today aren't fully self-driving. Many instead offer driver-assistance features like adaptive cruise control, lane keeping, or automatic emergency braking, while the human is still expected to stay alert. That in-between space is legally messy.

If a driver zones out because they trust the car too much, are they at fault? Or is the automaker to blame for overselling what the system can do? Some companies even call their tech "autopilot," a name that can suggest more autonomy than the system actually has.

Courts are already seeing cases where responsibility is split. A person might have misused the feature, but the system also didn’t behave the way it was supposed to. The result is a tangled mix of negligence and design flaws, with no obvious answer.

Insurance Is Struggling Too

Car insurance is built on the assumption that humans make mistakes. You're rewarded for safe driving and penalized for accidents. But if the car is making the decisions, how should that model change?

Some insurers are testing new approaches. They might base premiums on the system’s safety record instead of your own. Others are waiting and watching how the legal landscape changes before shifting their models.

When a crash happens, the claims process can get complicated. The driver, the automaker, and the software provider may all be involved, and each has an interest in blaming the others. Without new laws, these fights will keep happening.

The Legal System Isn’t Ready Yet

Traffic laws assume that people are making choices behind the wheel. Reckless driving, speeding, and failure to yield are all defined by human decisions. But what happens when the car is the one deciding?

Some states have started to update their rules, but nationwide, the law hasn’t caught up. That means an accident in one state could be judged differently in another. There’s no unified standard for what counts as negligence when the car is doing the driving.

Lawyers need a new toolkit. A Belleville car crash attorney handling a case involving an autonomous vehicle has to look beyond witness statements. They need to understand how the vehicle perceived its surroundings, what the software was supposed to do, and whether a malfunction occurred.

It's tempting to assume there's always a single person or party to blame. But autonomous crashes often involve multiple layers. Maybe the driver didn't react in time, but only because they relied on a system that didn't perform as expected. Or maybe the system worked fine, and another driver caused the collision.

More and more, the idea of shared fault is taking hold. Courts are starting to see these incidents as complex events where blame isn’t all-or-nothing. That reflects the reality of how these systems work in the wild.

Why It Matters Now

Self-driving cars aren't in beta anymore. They're deployed in dozens of cities, carrying passengers, making deliveries, and making real-time decisions in traffic. As more of these cars hit the roads, the stakes increase.

Each crash that reaches a courtroom can become a legal milestone. It helps set precedent, not just for who pays, but for how we define accountability in a machine-run world. If a self-driving system is usually safe, is that enough? Or do we expect it to outperform humans in every situation?

These aren’t just questions for lawyers. They affect how cities regulate traffic, how carmakers design their systems, and how we, as a society, decide what’s acceptable risk when the driver isn’t a person anymore.

Technology will keep moving, and full autonomy is coming. It may arrive more slowly than some predicted, but it's on the horizon. The law, however, will take time to catch up.

Eventually, we’ll see new legal categories, updated insurance structures, and possibly even federal standards. Until then, every incident is part of shaping what that future looks like. Responsibility is evolving. But it’s not vanishing. Whether it’s a person behind the wheel, an engineer behind the code, or a company deploying the system, someone will have to answer when things go wrong.


Think happier. Think calmer.

Think about subscribing for free weekly tools here.

No SPAM, ever! Read the Privacy Policy for more information.

One last step!

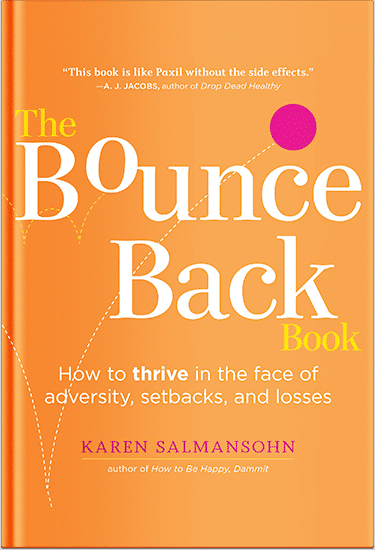

Please go to your inbox and click the confirmation link we just emailed you so you can start to get your free weekly NotSalmon Happiness Tools! Plus, you’ll immediately receive a chunklette of Karen’s bestselling Bounce Back Book!